Welcome to JKRobots.com

This website shows my project work: from my PhD thesis on Relative mapping algorithms, back to my earlier robotics work in my undergraduate thesis, and everything in between, including my Master's thesis on wireless media streaming and various other miscellaneous projects.

Enjoy,

Jay Kraut

email: jaykraut(at)gmail.com

PhD, University of Manitoba.

Here is the paper and the video. For more information about the Relative Algorithm, go to my thesis presentation page, which has a video of a rehearsal of my thesis presentation. Here is my updated portfolio with my postdoc work.

This is a video of the Relative Point Algorithm. Here is a link to the paper.

This is a video of the Relative Plane Algorithm. Here is a link to the paper.

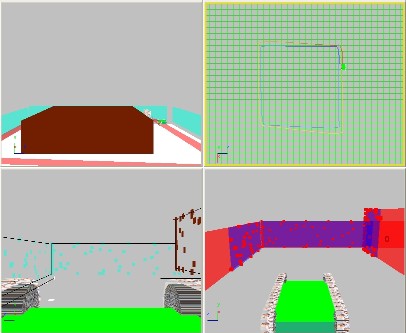

This video is from my undergraduate thesis. It shows my robot, which uses short-range IR sensors, a sonar, and shaft encoders to map and navigate an office. Notice how close the actual result is to the simulation. Click on the link for more information.

So how did I get from my undergraduate thesis to the Relative algorithms? Right after I completed my undergraduate thesis I wanted to continue the project and worked on developing a 3D map editor and simulation for computer vision. This video is a composite of my work in map editors and simulations. It features:

After I completed the 3D map editor and simulator, I decided to join TRLabs to work on wireless video streaming for my Master's. This video, the Ant Simulation, shows one of my favorite projects from that period, which was used to test AI algorithms for course work. My Master's thesis and other miscellaneous projects are available at this link.

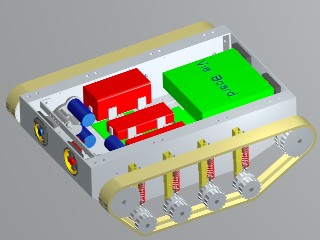

When I resumed my work on robotics for my PhD thesis, I started by attempting to construct a better robot, designing and building the electronics, and working with an FPGA to learn about implementing vision algorithms on it. The electronics and the FPGA mostly worked, but I realized I would not be able to achieve my goal of real-time mapping by this route, mainly because of the FPGA's slow transfer speed.

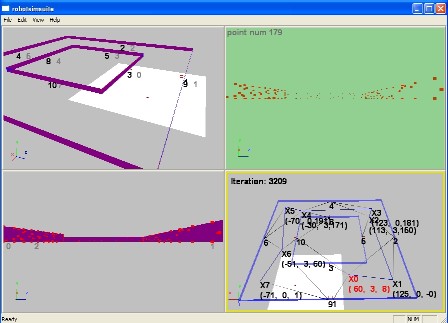

Instead of using a photorealistic simulator, I decided it made more sense to use a point and line simulation whose output resembles what a vision algorithm might produce. I resurrected the 3D simulation and added simulated point and line detection. I used the points with an EM algorithm to form planes, and used those planes to form a map. After several attempts at SLAM using planes as the input, I finally figured out the Relative Plane algorithm.

I have an extensive project log that I wrote when developing the Relative Plane algorithm. It might be interesting since it shows how I developed the algorithm from a basic idea.

After I completed the Relative Plane algorithm, I realized that the algorithm did two things well. It filtered noise, and it identified the static edge of a plane as it enters and leaves the viewpoint. I wondered whether it was possible to take the idea behind the Relative Plane algorithm and implement a version that works with points, one that filters out noise and distinguishes static points from dynamic points in real time. This led to the Relative Point algorithm seen above.
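
To give a rough feel for what separating static points from dynamic points can look like, here is a small Python sketch. It is not the Relative Point algorithm itself, whose details are in the paper and not on this page. It only illustrates one generic idea: the distance between two static points stays the same no matter how the sensor moves, so a point whose distances to most other points change between two frames is probably moving. The function name, the `tolerance` parameter, and the majority rule are all assumptions made for this illustration, and the sketch assumes that most tracked points are static.

```python
from itertools import combinations
from math import dist


def classify_static_points(frame_a, frame_b, tolerance=0.05):
    """Split point ids into (static, dynamic) lists.

    frame_a and frame_b map a point id to its (x, y, z) position as seen
    from the sensor in two different frames. Hypothetical helper: a pair
    of points "agrees" if the distance between them is unchanged (within
    tolerance) from one frame to the next.
    """
    ids = sorted(set(frame_a) & set(frame_b))
    support = {i: 0 for i in ids}

    # Count, for each point, how many other points kept their distance to it.
    for i, j in combinations(ids, 2):
        d_a = dist(frame_a[i], frame_a[j])
        d_b = dist(frame_b[i], frame_b[j])
        if abs(d_a - d_b) <= tolerance:
            support[i] += 1
            support[j] += 1

    # Assumed rule: a point is static if it agrees with more than half
    # of the other points.
    needed = (len(ids) - 1) / 2
    static = [i for i in ids if support[i] > needed]
    dynamic = [i for i in ids if support[i] <= needed]
    return static, dynamic


# Made-up data: the sensor moves by (1, 0, 0), so static points appear
# shifted by (-1, 0, 0). Point 4 also moves on its own.
frame_a = {0: (0, 0, 0), 1: (2, 0, 0), 2: (0, 2, 0), 3: (0, 0, 2), 4: (1, 1, 1)}
frame_b = {0: (-1, 0, 0), 1: (1, 0, 0), 2: (-1, 2, 0), 3: (-1, 0, 2), 4: (0.5, 1.5, 1)}
static, dynamic = classify_static_points(frame_a, frame_b)
print("static:", static, "dynamic:", dynamic)
```

A real-time version would also have to cope with measurement noise and with points entering and leaving the view, which this sketch ignores.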